For Dataverse (Dynamics 365)

DCS for Azure provides pre-built Azure Data Factory (ADF) pipeline templates for discovering and masking sensitive data in your Dynamics 365 (D365) environments. These pipelines apply masking rules directly to data within Dataverse tables to ensure compliance and data privacy.

Key components

The following are the key technologies involved:

- Dynamics 365 (D365): A suite of enterprise applications, including CRM and ERP, built on Microsoft Dataverse.

- Dataverse: A cloud-based data platform that stores and manages the data used by D365 applications.

- Azure Data Factory (ADF): A data integration service that orchestrates the movement and transformation of data.

- Delphix Compliance Services (DCS) for Azure: A solution that uses ADF pipelines to automate data masking for D365 and Dataverse environments.

D365 runs on Dataverse, and the pipelines created by DCS for Azure use ADF to apply masking rules directly to sensitive data within Dataverse tables.

Pipelines

DCS for Azure includes pipelines that connect ADF with Dynamics 365:

- Dataverse to Dataverse Discovery Pipeline – Scans Dataverse tables to discover and catalog sensitive data, storing metadata in Azure SQL.

- Dataverse to Dataverse Masking Pipeline – Masks sensitive data by moving it from a source D365 environment to a target environment.

These pipelines use ADF data flows to extract tables from the source Dataverse instance, apply masking rules, and load the masked data into a target Dataverse instance. Metadata stored in Azure SQL defines which tables to mask and the rules for each column. REST services connect ADF to DCS for Azure to coordinate masking operations. The pipelines support both conditional and unconditional masking and include mechanisms for error handling and auditing.

The pipelines use the following framework components:

- REST API: Connects ADF with DCS for Azure to execute masking workflows and update status information.

- Azure SQL: Hosts metadata that defines masking rules, mappings, and execution logs.

- Dataverse connectors: Move data between the source and target environments.

Using these components together facilitates secure, automated, and scalable masking operations across Dynamics 365 environments.

Prerequisites

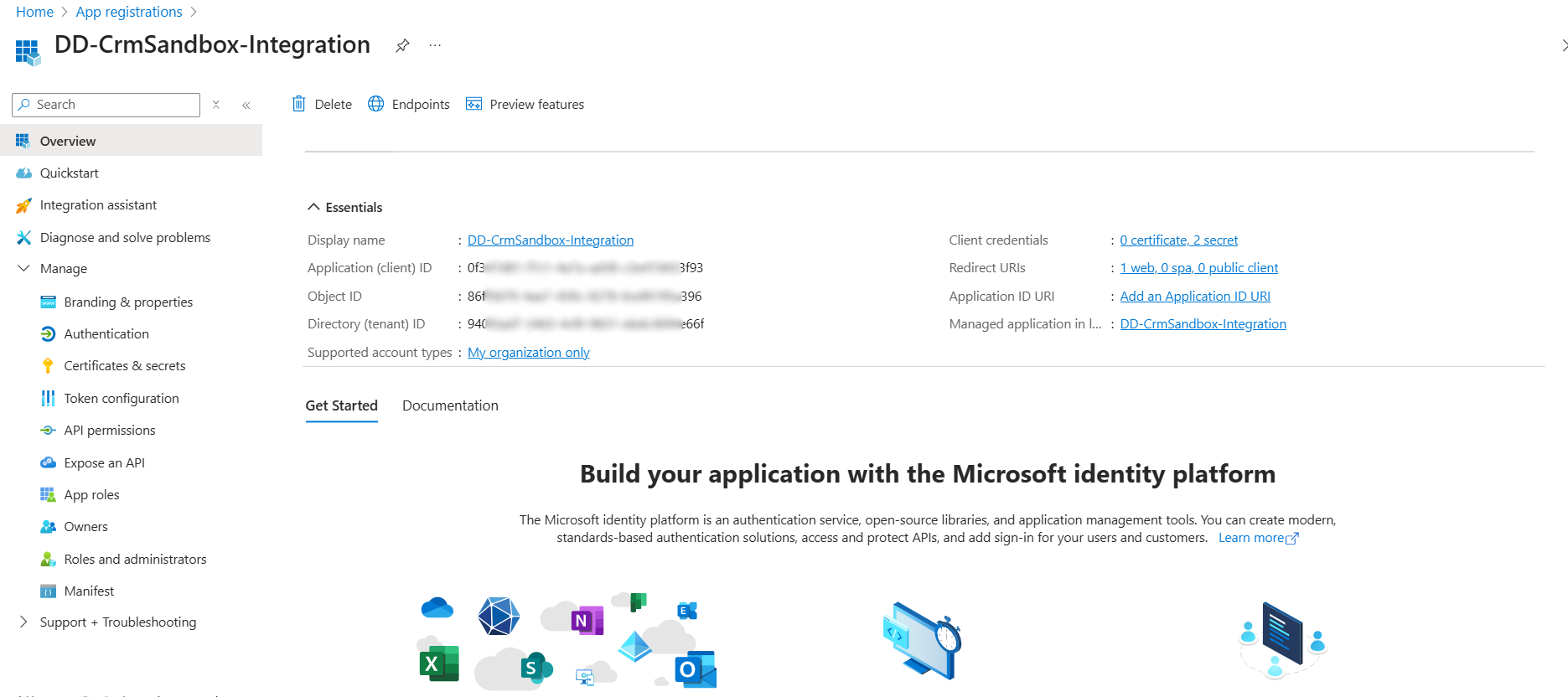

Create an App Registration for Dataverse in Azure Portal

-

Sign in to Azure

- Go to https://portal.azure.com.

- Log in with your organizational Azure Active Directory (Azure AD) credentials.

-

Register a new application

Generate a Client Secret

- Inside the registered app, go to Certificates & secrets.

- Click New client secret.

-

Fill in the required details:

- Description:

Sandbox access key - Expires: Choose a suitable expiration period (for example, 6 months or 12 months).

- Description:

- Click Add.

- Copy and save the Value of the client secret. You won’t be able to see it again after you leave this page.

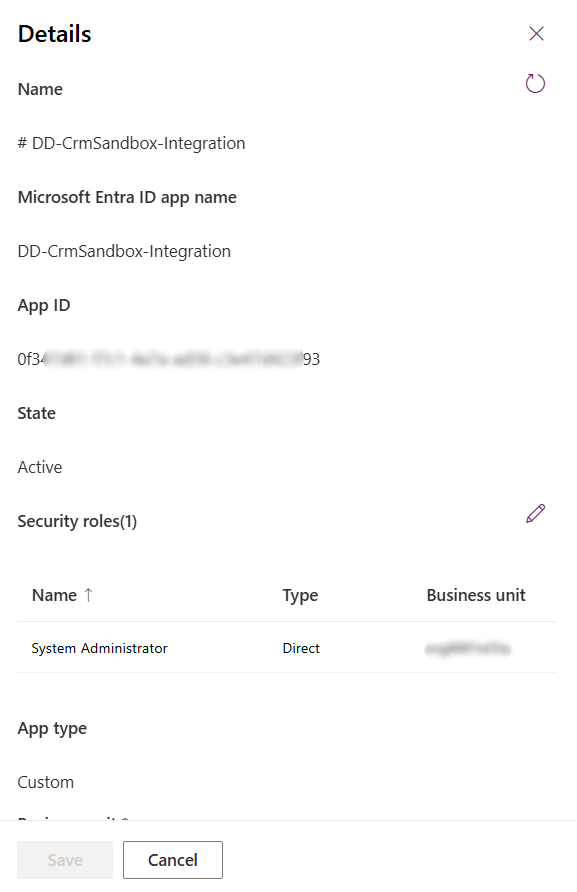

Add App ID in Dataverse Sandbox (Power Platform Admin Center)

-

Go to the Power Platform Admin Center:

- URL: https://admin.powerplatform.microsoft.com

- Navigate to Environments.

- Select your sandbox environment.

-

Go to Dataverse Admin Settings:

- Click Settings → Users + Permissions → Application Users.

- Click New App User.

-

Add the app user:

- In the App Registration field, search using the Application (client) ID you copied from Azure.

- Set the following:

- Business Unit

- Security Role: System Administrator

- Click Create.

Once this setup is complete, you can proceed to create the following linked services in Azure Data Factory using the registered app credentials:

Troubleshoot common issues

If you encounter problems when importing or running the pipelines, use the following table to resolve common issues:

| Issue | Cause | Solution |

|---|---|---|

| Pipeline fails to import into ADF | Incorrect file format or missing linked services. |

|

| Masking job fails immediately after starting | Missing or incorrect pipeline parameters. |

|

| Dataverse connection errors | Linked service credentials or permissions are invalid. |

|

| Masking results are incorrect or incomplete | Metadata mapping tables are out of sync with the Dataverse schema. |

|

| Pipeline performance is slow | Default batch size is too small for large datasets. |

|