Parquet structure

This guide provides an overview of handling Parquet files for data masking. It includes defining Parquet schemas, understanding nested structures, and using Parquet column paths to accurately target data fields for masking.

Understanding Parquet structure

Parquet is a columnar storage format: it stores data by column rather than by row, which improves compression and query performance. It is a binary format, so it can be read only with a Parquet parser or reader. A Parquet file begins and ends with the 4-byte magic number PAR1. The footer at the end of the file holds the file metadata, such as the format version, schema, row-group sizes, encodings and compression codecs, and column statistics. The file body contains the row groups, column chunks, and pages (including dictionary pages) that store the actual data.
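Because the metadata sits at the end of the file, a reader locates it by working backwards. Per the Parquet format specification, a file is laid out as `PAR1 | row groups | file metadata | 4-byte little-endian metadata length | PAR1`. The minimal Python sketch below finds the raw footer bytes under that layout. `read_footer_length` and `footer_bytes` are hypothetical helpers; decoding the Thrift-encoded metadata itself is out of scope.

```python
import struct

MAGIC = b"PAR1"

def read_footer_length(data: bytes) -> int:
    """Return the size in bytes of the serialized file metadata (footer)."""
    # Smallest possible layout: magic + 4-byte length + magic = 12 bytes.
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: missing PAR1 magic bytes")
    # The footer length is stored just before the trailing magic number.
    (length,) = struct.unpack("<I", data[-8:-4])
    if length > len(data) - 12:
        raise ValueError("footer length exceeds file size")
    return length

def footer_bytes(data: bytes) -> bytes:
    """Return the raw (still Thrift-encoded) file metadata bytes."""
    length = read_footer_length(data)
    end = len(data) - 8  # the footer ends where the length field begins
    return data[end - length:end]
```

For a real file, `data` would be the file contents (or just its tail), and the returned bytes would be handed to a Thrift decoder to obtain the schema and row-group metadata.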

Definitions

Schema: A Parquet schema defines the structure of the stored data, including field types, repetition rules, and nesting levels. The schema can include primitive types (INT32, INT64, BOOLEAN, BINARY, FLOAT, DOUBLE) and complex types (LIST, MAP, STRUCT).

Groups and Fields: Groups represent structured objects (similar to JSON objects or XML elements), and fields define individual data points within a group (equivalent to JSON fields or XML nodes).

Repetition Levels and Definition Levels: The repetition level tracks repeated (LIST) elements inside a nested structure, identifying whether a value starts a new list or continues an existing one. The definition level tracks nullability in nested or optional columns: it records how many of the optional or repeated fields along the column's path are actually defined (non-null) for a given value.
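To make the two levels concrete, consider a hypothetical optional `Emails` list of optional strings in the standard three-level LIST layout: `optional group Emails (LIST) { repeated group list { optional binary element (STRING); } }`. Its maximum definition level is 3 (Emails, list, element), and its maximum repetition level is 1. The minimal Python sketch below shreds that one column into (value, repetition level, definition level) triples. It is written for this single schema only and is not how parquet-java implements shredding.

```python
from typing import List, Optional, Tuple

Triple = Tuple[Optional[str], int, int]  # (value, repetition level, definition level)

def shred_optional_list(records: List[Optional[List[Optional[str]]]]) -> List[Triple]:
    """Shred an optional list of optional strings into Parquet-style level triples."""
    out: List[Triple] = []
    for emails in records:
        if emails is None:
            # Emails itself is null: nothing along the path is defined.
            out.append((None, 0, 0))
        elif not emails:
            # Emails is defined but empty: only the outer group is defined.
            out.append((None, 0, 1))
        else:
            for i, element in enumerate(emails):
                rep = 0 if i == 0 else 1  # 0 starts a new record, 1 continues the list
                if element is None:
                    out.append((None, rep, 2))  # list entry exists, element is null
                else:
                    out.append((element, rep, 3))  # fully defined value
    return out
```

For example, `shred_optional_list([None, [], ["a", None, "b"]])` yields one triple per null or empty record and three triples for the last record, where only the first element has repetition level 0.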

Hierarchical structure

At a high level, the Parquet hierarchical structure consists of three primary components:

Row Groups: A row group is a horizontal partition of the data (a collection of rows) that holds the column data for that subset of rows. Each row group can be processed independently, which allows parallel processing and reading part of the data without loading the entire dataset into memory.

Columns: Each row group contains one column chunk per column, and each column chunk stores all values of that column for the rows in the row group. Because each column is stored separately, compression and encoding can be applied per column, and a query engine can read only the columns it needs, reducing computational overhead and memory usage.

Pages: Each column chunk is divided into pages containing encoded and compressed data. There are three types of pages: data pages (the actual column values), dictionary pages (dictionaries of values used by dictionary-encoded data pages), and index pages (optional; store row indexes for faster lookups).
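The relationship between row groups and column chunks can be modeled in a few lines. In this Python sketch, rows are partitioned horizontally into row groups, and within each row group the values are regrouped column by column. It is an illustration under simplifying assumptions (flat schema; no pages, encoding, or compression), and `to_row_groups` and `read_column` are hypothetical helpers.

```python
from typing import Any, Dict, List

RowGroup = Dict[str, List[Any]]  # column name -> column chunk (values for this row group)

def to_row_groups(rows: List[Dict[str, Any]], rows_per_group: int) -> List[RowGroup]:
    """Partition rows into row groups, storing each group column by column."""
    if rows_per_group <= 0:
        raise ValueError("rows_per_group must be positive")
    columns = list(rows[0].keys()) if rows else []
    groups: List[RowGroup] = []
    for start in range(0, len(rows), rows_per_group):
        chunk = rows[start:start + rows_per_group]
        # One "column chunk" per column: all values of that column in this row group.
        groups.append({col: [row[col] for row in chunk] for col in columns})
    return groups

def read_column(groups: List[RowGroup], column: str) -> List[Any]:
    """Read a single column by visiting only that column's chunk in each row group."""
    values: List[Any] = []
    for group in groups:
        values.extend(group[column])
    return values
```

`read_column` touches only the requested column in each row group, which mirrors the column pruning described above.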

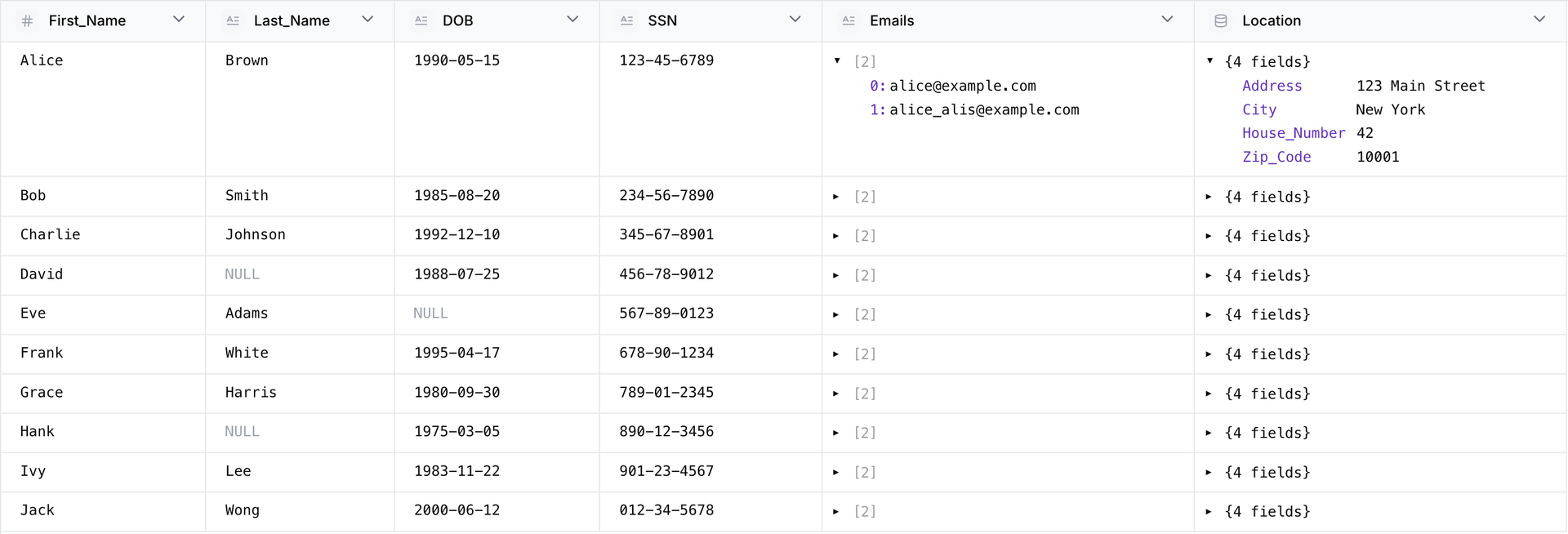

Parquet example (Tabular format)

Understanding ColumnPath

In Parquet, a ColumnPath is the fully qualified name of a column, representing its location within a hierarchical (nested) schema. It is used to reference specific columns in structured or nested datasets.

In the context of the masking engine (specific to parquet-java libraries):

- For flat schemas, column paths are simply the column names (e.g., First_Name).
- For nested schemas, column paths represent the full path to a nested field, using a slash (/) separator to indicate hierarchy (e.g., Location/Address).
- For list schema elements, column paths end in <path>/list/element (e.g., Emails/list/element).

ColumnPath example

First_Name

Last_Name

DOB

SSN

Emails/list/element

Location/Address

Location/City

Location/House_Number

Location/Zip_Code
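The path rules above can be expressed as a small recursive function. This sketch assumes a hypothetical nested-dict representation of the schema: a string for a primitive field, a dict for a group, and a `("LIST", element)` tuple for a list. The physical types assigned to the example fields are illustrative guesses, and real column paths should always be taken from the file's own schema.

```python
from typing import Any, Dict, List

def column_paths(schema: Dict[str, Any], prefix: str = "") -> List[str]:
    """Build slash-separated column paths, using <path>/list/element for lists."""
    paths: List[str] = []
    for name, spec in schema.items():
        path = prefix + name
        if isinstance(spec, dict):
            # Group (struct): recurse, extending the path with "/".
            paths.extend(column_paths(spec, path + "/"))
        elif isinstance(spec, tuple) and spec[0] == "LIST":
            # Three-level list layout: <name>/list/element.
            element_path = path + "/list/element"
            element = spec[1]
            if isinstance(element, dict):
                paths.extend(column_paths(element, element_path + "/"))
            else:
                paths.append(element_path)
        else:
            # Primitive leaf column.
            paths.append(path)
    return paths

# Hypothetical schema matching the example paths (types are illustrative only).
example_schema = {
    "First_Name": "BINARY",
    "Last_Name": "BINARY",
    "DOB": "INT32",
    "SSN": "BINARY",
    "Emails": ("LIST", "BINARY"),
    "Location": {"Address": "BINARY", "City": "BINARY", "House_Number": "INT32", "Zip_Code": "BINARY"},
}
```

Calling `column_paths(example_schema)` returns the nine paths listed above, in schema order.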

Data types in Parquet

This section describes how Apache Parquet stores data on disk and how data types are defined within the format. Understanding Parquet’s data type layout is essential for applying appropriate masking algorithms to Parquet columns.

Parquet is a columnar storage format that utilizes two layers of data typing.

- Physical types (Primitive types)

- Logical types (Extended types)

Physical types

Parquet defines a limited set of physical (primitive) types that determine how data is stored on disk at the binary level. These types form the foundational structure for all Parquet data.

The table below outlines each physical type, its storage size, and how the Java-based Parquet reader interprets it within the context of the Delphix Continuous Compliance engine.

| Primitive Type | Storage Size | Description | Java Mapping |
|---|---|---|---|
| BOOLEAN | 1 bit (packed) | True or False | boolean |
| INT32 | 4 bytes | 32-bit signed integer | int |
| INT64 | 8 bytes | 64-bit signed integer | long |
| INT96 | 12 bytes | Legacy nanosecond timestamp (used by Impala or Hive engines) | Binary (decoded to LocalDateTime) |
| FLOAT | 4 bytes | IEEE 754 single-precision float | float |
| DOUBLE | 8 bytes | IEEE 754 double-precision float | double |
| BYTE_ARRAY / BINARY | Variable | Length-prefixed binary blob (0–2³¹-1 bytes) | Binary / byte[] |
| FIXED_LEN_BYTE_ARRAY | N bytes (fixed length) | Fixed-length binary blob | Binary / byte[] |
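To see how these physical types look at the byte level, the PLAIN encoding in the Parquet format specification stores fixed-width numbers little-endian, packs BOOLEAN values one bit each (least-significant bit first), and writes each BYTE_ARRAY value as a 4-byte little-endian length followed by the bytes. The minimal Python sketch below decodes an uncompressed, PLAIN-encoded value buffer. It ignores page headers, compression, and other encodings (dictionary, RLE), does not handle FIXED_LEN_BYTE_ARRAY or INT96, and `decode_plain` is a hypothetical helper, not part of parquet-java or the masking engine.

```python
import struct
from typing import Any, List

# struct format codes for the fixed-width physical types (PLAIN is little-endian).
_FIXED_WIDTH = {"INT32": "<i", "INT64": "<q", "FLOAT": "<f", "DOUBLE": "<d"}

def decode_plain(physical_type: str, data: bytes, count: int) -> List[Any]:
    """Decode `count` PLAIN-encoded values of the given physical type."""
    if physical_type == "BOOLEAN":
        # Bit-packed, LSB first: value i is bit (i % 8) of byte (i // 8).
        return [bool((data[i // 8] >> (i % 8)) & 1) for i in range(count)]
    if physical_type == "BYTE_ARRAY":
        values, pos = [], 0
        for _ in range(count):
            (length,) = struct.unpack_from("<I", data, pos)  # 4-byte length prefix
            pos += 4
            values.append(data[pos:pos + length])
            pos += length
        return values
    fmt = _FIXED_WIDTH[physical_type]  # KeyError for unsupported types
    size = struct.calcsize(fmt)
    return [struct.unpack_from(fmt, data, i * size)[0] for i in range(count)]
```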

Logical types

Parquet logical types (represented in code by LogicalTypeAnnotation, or by the older ConvertedType) annotate the core set of physical (primitive) types with semantic meaning. These annotations do not alter the underlying storage format on disk; instead, they instruct Parquet readers to interpret the stored bytes in a specific way.

For example, a logical type might indicate that an INT64 value should be interpreted as a timestamp, a 16-byte fixed-length byte array as a UUID, or an integer as a decimal with scale 2. This semantic layer ensures that consuming applications read and process the data correctly.
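A minimal Python sketch of how a reader applies a few logical annotations to the raw physical values: DATE as INT32 days since the Unix epoch, DECIMAL as an unscaled integer divided by 10^scale, and TIMESTAMP(MICROS) with the UTC-adjusted flag set as INT64 microseconds since the epoch. The function names are hypothetical, and other units (MILLIS, NANOS) and non-UTC-adjusted timestamps are left out for brevity.

```python
from datetime import date, datetime, timedelta, timezone
from decimal import Decimal

UNIX_EPOCH_DATE = date(1970, 1, 1)
UNIX_EPOCH_UTC = datetime(1970, 1, 1, tzinfo=timezone.utc)

def as_date(days: int) -> date:
    """DATE: INT32 number of days since 1970-01-01."""
    return UNIX_EPOCH_DATE + timedelta(days=days)

def as_decimal(unscaled: int, scale: int) -> Decimal:
    """DECIMAL(precision, scale): the stored integer divided by 10**scale."""
    return Decimal(unscaled).scaleb(-scale)

def as_timestamp_micros_utc(micros: int) -> datetime:
    """TIMESTAMP(MICROS, UTC-adjusted): INT64 microseconds since the Unix epoch."""
    return UNIX_EPOCH_UTC + timedelta(microseconds=micros)
```

For instance, `as_decimal(12345, 2)` gives `Decimal("123.45")`. The stored value is still the integer 12345, which is why such columns call for numeric masking that keeps the result within the declared precision.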

Note that some logical types support only specific masking algorithms, and a few are currently not supported for masking. The table below lists masking support for each logical type:

| Logical Type | Physical Storage | Description | Supported Masking Algorithm |
|---|---|---|---|
| STRING | BYTE_ARRAY | UTF-8 encoded text | Supports string-based algorithms |
| MAP | 3-level GROUP | Key→value pairs (repeated key_value groups) | Supported |
| LIST | 3-level GROUP | Ordered collection of elements | Supported |
| ENUM | BINARY | One of a predefined set of string values | Supports string-based algorithms |
| DECIMAL | INT32, INT64, or FIXED_LEN_BYTE_ARRAY | Fixed-precision number with (precision, scale) | Supports only numeric-based algorithms |
| DATE | INT32 | Days since Unix epoch | Supports only numeric-based algorithms |
| TIME | INT32/INT64 + unit (MILLIS/MICROS/NANOS) | Time since midnight (with optional UTC adjustment flag) | Supports only numeric-based algorithms |
| TIMESTAMP | INT64 + unit (MILLIS/MICROS/NANOS) | Instant since Unix epoch (with optional UTC adjustment flag) | Supports only numeric-based algorithms |
| INTEGER | INT32/INT64 | Integer with explicit bit-width and signedness | Supports only numeric-based algorithms |
| JSON | BYTE_ARRAY | JSON document as text | Supported |
| BSON | BYTE_ARRAY | BSON document | Unsupported |
| UUID | FIXED_LEN_BYTE_ARRAY(16) | 128-bit universally unique identifier | Supported. Because a UUID is usually represented as a hexadecimal string, choose an algorithm whose output is still a valid UUID. |
| INTERVAL | FIXED_LEN_BYTE_ARRAY(12) | Hive-style interval: months (4 bytes), days (4 bytes), milliseconds (4 bytes), little-endian | Unsupported |
| FLOAT16 | FIXED_LEN_BYTE_ARRAY(2) | IEEE 754 half-precision (16-bit) floating point | Unsupported |
| TIMESTAMP | INT96 | Legacy nanosecond timestamp | Supports only date-based algorithms with only date format: |
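INT96 timestamps carry no logical-type annotation in the Parquet specification, which marks INT96 as deprecated. Under the widely used Impala/Hive convention, the 12 bytes hold 8 bytes of nanoseconds within the day followed by a 4-byte Julian day number, both little-endian. The minimal Python sketch below decodes and encodes that layout with naive (timezone-less) datetimes. It illustrates the byte layout only and is not the conversion the masking engine performs. Python's `datetime` has microsecond precision, so sub-microsecond digits are truncated.

```python
import struct
from datetime import datetime, timedelta

# Julian Day Number of 1970-01-01, the Unix epoch.
JULIAN_DAY_OF_UNIX_EPOCH = 2_440_588
EPOCH = datetime(1970, 1, 1)

def decode_int96(raw: bytes) -> datetime:
    """Decode 8-byte LE nanoseconds-of-day + 4-byte LE Julian day into a datetime."""
    if len(raw) != 12:
        raise ValueError("INT96 values are exactly 12 bytes")
    nanos_of_day, julian_day = struct.unpack("<qi", raw)
    days = julian_day - JULIAN_DAY_OF_UNIX_EPOCH
    # Truncate nanoseconds to microseconds, the finest unit datetime supports.
    return EPOCH + timedelta(days=days, microseconds=nanos_of_day // 1000)

def encode_int96(ts: datetime) -> bytes:
    """Encode a naive datetime using the same 12-byte layout."""
    delta = ts - EPOCH  # timedelta normalizes seconds into [0, 86400)
    nanos_of_day = (delta.seconds * 1_000_000 + delta.microseconds) * 1000
    return struct.pack("<qi", nanos_of_day, delta.days + JULIAN_DAY_OF_UNIX_EPOCH)
```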

Algorithms and masking parquet

Many query engines and table formats, including Apache Hive, Apache Drill, AWS Athena/Redshift Spectrum, Presto/Trino, DuckDB, Delta Lake, and others, use Parquet files as their underlying storage format. When masking data at the storage layer, it is essential to follow a schema-aware and type-sensitive approach.

To apply masking effectively to Parquet data:

- Inspect the generated Parquet schema.

- Select masking algorithms based on the physical type of the column.

- Ensure that masked outputs conform to the original Parquet type definitions and any associated logical annotations (for example, TIMESTAMP or DECIMAL).

Example scenario: If the Parquet schema defines a column with a logical type of TIMESTAMP, check the underlying physical type:

- If the type is INT96, use a date-based algorithm such as DateShift.

- If the type is INT32 or INT64, use a numeric masking algorithm.
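The selection rules in this section and in the logical-type table can be summarized as a lookup. In this sketch, `algorithm_family` and the family names it returns are hypothetical labels, not masking-engine identifiers or algorithm names. A `None` result means the table gives no family-specific guidance (for example, JSON, UUID, LIST, or MAP columns, or unannotated columns), so the column should be reviewed by hand.

```python
from typing import Optional

STRING_LOGICAL = {"STRING", "ENUM"}
NUMERIC_LOGICAL = {"DECIMAL", "DATE", "TIME", "TIMESTAMP", "INTEGER"}
UNSUPPORTED_LOGICAL = {"BSON", "INTERVAL", "FLOAT16"}

def algorithm_family(physical: str, logical: Optional[str]) -> Optional[str]:
    """Suggest an algorithm family from a column's physical and logical types."""
    if physical == "INT96":
        # Legacy timestamp: date-based algorithms (for example, DateShift).
        return "date"
    if logical in UNSUPPORTED_LOGICAL:
        return "unsupported"
    if logical in STRING_LOGICAL:
        return "string"
    if logical in NUMERIC_LOGICAL:
        # INT32/INT64-backed DATE, TIME, TIMESTAMP, INTEGER and DECIMAL values.
        return "numeric"
    return None  # not covered by the table: inspect the column manually
```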

Memory considerations for Parquet data masking job

A row group is the smallest partition of data loaded into memory when reading a Parquet file. The size of a row group can range from a few megabytes to several gigabytes.

Profiling job: The minimum memory allocation must be at least equal to the size of the largest row group in the file.

Masking job: Since masking involves reading and writing simultaneously, the minimum memory allocation must be twice the largest row group size. This ensures that heap memory can accommodate row groups from both the input and output files.

Note that memory estimates based on row-group size are only a ballpark figure; the actual job may require additional memory for other processing operations.
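The sizing rule above amounts to a simple calculation: the largest row group times 1 for profiling, or times 2 for masking. This hypothetical helper assumes the row-group sizes are already known (for example, from the file footer) and that they approximate in-memory size. It returns only the documented lower bound, so a real job still needs headroom beyond it.

```python
from typing import List

MIB = 1024 * 1024

def minimum_heap_bytes(row_group_sizes: List[int], job: str) -> int:
    """Lower bound on heap size: largest row group x1 (profiling) or x2 (masking)."""
    if not row_group_sizes:
        raise ValueError("file has no row groups")
    # Masking reads the input row group and writes the output row group at the same time.
    multiplier = {"profiling": 1, "masking": 2}[job]
    return multiplier * max(row_group_sizes)
```

For example, a file whose largest row group is 512 MiB would need at least 512 MiB of heap to profile and at least 1024 MiB to mask.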

Parquet masking limitations

In a Parquet file, each column chunk records its own compression codec, so different columns, or the same column in different row groups, may use different codecs. If the masking engine encounters such a file, it writes the masked output file without compression, using the UNCOMPRESSED codec.
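Whether a file falls into this mixed-codec case can be checked from its column-chunk metadata. The sketch below is hypothetical: it assumes the (row group index, column path, codec) triples have already been read from the file footer, and it does not reflect how the masking engine performs this check internally.

```python
from typing import List, Tuple

ChunkCodec = Tuple[int, str, str]  # (row group index, column path, codec name)

def uses_mixed_codecs(chunks: List[ChunkCodec]) -> bool:
    """True if the column chunks do not all share a single compression codec."""
    codecs = {codec for _row_group, _path, codec in chunks}
    return len(codecs) > 1
```

A `True` result identifies a file whose masked output would be written with the UNCOMPRESSED codec, which can noticeably increase the output file size.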

The new masked Parquet file is written using the Parquet file writer’s default properties. As a result, some properties and statistics from the original file may not be carried over to the masked file.

In the execution component for a Parquet file, Bytes Processed represents the total data written. However, because the size of the Parquet file metadata cannot currently be calculated, Bytes Remaining may show a non-zero value (the metadata size) even after masking is complete.